The gr(AI)t rift

Is open source good for AI models, and what can we learn from Stable Diffusion?

For years now, the hacker ethic has been the norm in software development, resulting in what we today call open-source development. Open-source development has been a key factor in the technical boom we are living through <source>. However, now that large AI models are going mainstream, should we finally gatekeep the technology, or should we trust the collective and keep up the open-source momentum?

In this opinion post, I will try to figure out where I stand on open-source development of large-scale AI.

First off, I need to explain how AI models differ from what we regard as traditional software. Broadly speaking, traditional software is deterministic, while AI is stochastic. What does that mean?

Well, deterministic systems are those that operate according to pre-defined rules and instructions, with a fixed set of inputs producing a predictable and consistent output. The behaviour of such systems is entirely determined by their programming, and they do not have the ability to adapt or learn from new data or experiences. Traditional software is an example of a deterministic system, as it executes a pre-defined set of instructions to produce a predictable output based on the inputs it receives.

This is what is called a white-box approach, where the internal workings of the system or process being studied are fully known and understood. In other words, in a white-box approach, the individual components of a system are transparent and can be examined and analyzed in detail.
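To make the idea concrete, here is a minimal sketch of a deterministic, white-box piece of software. The function name and the pricing rule are invented purely for illustration; the point is only that every branch is written down, can be read, and returns the same output for the same input.

```python
def shipping_cost(weight_kg: float) -> float:
    """Hypothetical pricing rule: every branch is visible and auditable."""
    if weight_kg <= 1.0:
        # Flat fee for small parcels.
        return 5.0
    if weight_kg <= 10.0:
        # Flat fee plus a per-kilogram charge above the first kilogram.
        return 5.0 + 1.5 * (weight_kg - 1.0)
    # Heavier parcels: price at 10 kg plus a lower per-kilogram charge.
    return 18.5 + 1.0 * (weight_kg - 10.0)


# Same input, same output, every time the program runs.
first = shipping_cost(3.0)
second = shipping_cost(3.0)
```

Anyone who wants to know why a parcel costs what it does can trace the answer back to a specific line of code.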

In contrast, stochastic systems are those that are subject to random variation or uncertainty. These systems use probabilistic algorithms and statistical models to analyze data and make predictions, and their behaviour can change over time as they learn from new experiences. AI is an example of a stochastic system, as it is designed to learn from large amounts of data and make predictions based on statistical patterns rather than pre-defined rules.

AI can be thought of as a gray-box system, because AI systems typically involve both transparent and opaque components.

On the one hand, AI systems use algorithms and statistical models to analyze large amounts of data and make predictions or decisions based on patterns learned from that data. These algorithms and models can be examined and analyzed in detail using white-box approaches, allowing developers and analysts to gain a deeper understanding of how the system works and to identify potential issues or areas for improvement.

On the other hand, AI systems may also incorporate opaque components, such as neural networks or other machine learning models, that are difficult to understand or interpret using traditional white-box methods. These components may operate in ways that are not fully understood, making it challenging to predict how the system will behave in certain situations or to diagnose issues that may arise.
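As a rough illustration of the stochastic, gray-box side, the sketch below trains a tiny one-feature logistic model with stochastic gradient descent. Everything here is an assumption made for the example: the toy data, the function names, and the hyperparameters. The training code is fully readable (the transparent part), but the behaviour ends up encoded in two learned numbers that depend on the data and on the random seed (the opaque part, in miniature).

```python
import math
import random


def train_tiny_model(data, seed, epochs=200, lr=0.1):
    """Fit a one-feature logistic model with SGD; weights start out random."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    for _ in range(epochs):
        x, y = rng.choice(data)                   # pick a random training example
        p = 1 / (1 + math.exp(-(w * x + b)))      # predicted probability of label 1
        w -= lr * (p - y) * x                     # gradient step on the weight
        b -= lr * (p - y)                         # gradient step on the bias
    return w, b


def predict_proba(model, x):
    """Probability that x belongs to class 1 under the learned parameters."""
    w, b = model
    return 1 / (1 + math.exp(-(w * x + b)))


def sample_label(model, x, rng):
    """Draw a label from the predicted probability instead of thresholding."""
    return 1 if rng.random() < predict_proba(model, x) else 0


# Made-up toy data: small x values are labelled 0, large ones 1.
data = [(-2.0, 0), (-1.0, 0), (-0.5, 0), (0.5, 1), (1.0, 1), (2.0, 1)]

model_a = train_tiny_model(data, seed=1)
model_b = train_tiny_model(data, seed=2)   # same code, same data, different seed
```

The two models come from identical code and data, yet their parameters differ, and `sample_label` can return different answers for the same input. With two parameters this is still easy to inspect; the interpretability problem appears when the same recipe is scaled up to a very large number of parameters.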

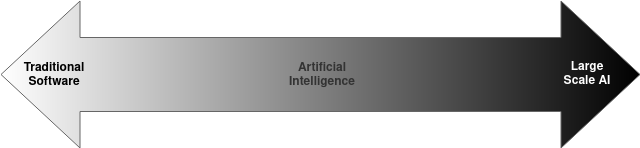

With that in mind, we can understand the state of current software development in the following context:

Software along this spectrum becomes more powerful and useful as it becomes more convoluted and less understood. The far end of the spectrum (purely black box) is where we find large-scale AI models. These are the models that I am most interested in forming an opinion about.

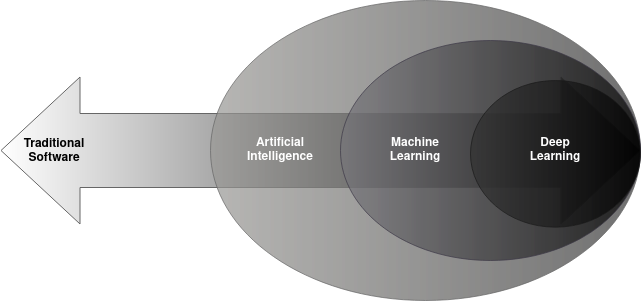

The field behind these large-scale AI models can currently be broken down as follows:

- Artificial Intelligence (AI) encompasses a wide range of techniques and applications for developing intelligent systems that can perform tasks that typically require human intelligence.

- Machine Learning (ML) is a subset of AI that involves training algorithms to learn from data and make predictions or decisions based on that data. ML algorithms can be implemented using a white-, gray-, or black-box approach.

- Deep Learning (DL) is a subset of ML that uses deep neural networks to analyze and recognize patterns in data. Because of their size, DL models are in practice black boxes. However, we can always try to understand what's in that black box, making it a little bit grayer.

History of OpenAI

History of Stability AI

The technical barrier to entry vs. the API