Intro to self-driving cars (1/2)

Self-driving cars are the holy grail of robotics. So, how does a car become automated?

Twenty years ago, the future of cars was always imagined as flying cars. Come 2022, this is nowhere near what our future looks like (although urban air mobility is on the rise). Today our future looks less dystopian yet more automated. As all industries move to automate things using robotics, the automotive industry is no exception.

So, what's a self-driving car? A self-driving car, or automated vehicle, is just a robot: a robot on wheels that also happens to carry us around. Why is that distinction important? Because automated cars came into the picture after the electrification of cars, which turned the conventional 'combustion-based' design into an electric systems design. And electric systems, by their nature, are easier to automate.

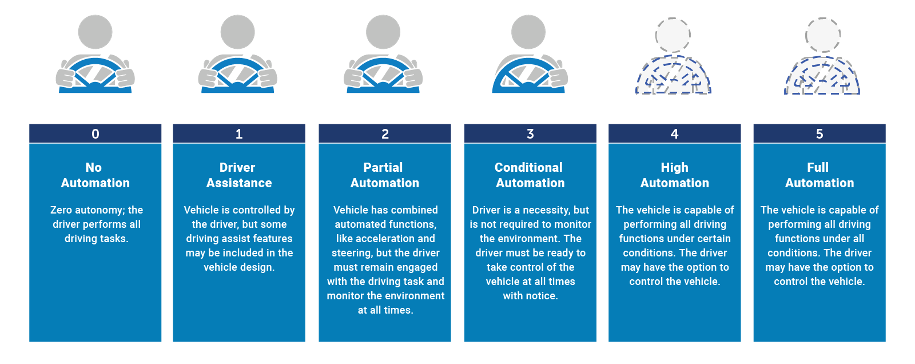

Now, what is automation in the context of cars, and how do we determine if a car is a self-driving car? SAE (the Society of Automotive Engineers) created a standard to answer that question, standardizing how the industry defines and addresses automated vehicles.

Here we can better define the mainstream notion of self-driving cars as Level 5 automation according to the J3016 standard. However, the industry is currently between Levels 3 and 4.
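To make the levels concrete, here is a minimal sketch of the six J3016 levels as a Python enum. The member names and the `human_must_supervise` helper are my own shorthand for illustration, not wording taken from the standard.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving automation levels 0 to 5, as numbered in SAE J3016."""
    NO_AUTOMATION = 0           # the human does all the driving
    DRIVER_ASSISTANCE = 1       # steering OR speed support
    PARTIAL_AUTOMATION = 2      # steering AND speed support, driver supervises
    CONDITIONAL_AUTOMATION = 3  # system drives in limited conditions, driver takes over on request
    HIGH_AUTOMATION = 4         # system drives in limited conditions, no takeover expected
    FULL_AUTOMATION = 5         # system drives everywhere, in all conditions


def human_must_supervise(level: SAELevel) -> bool:
    """Levels 0 to 2 are driver-support features: the human is still driving."""
    return level <= SAELevel.PARTIAL_AUTOMATION
```

For example, `human_must_supervise(SAELevel.PARTIAL_AUTOMATION)` is true, while the same check on Level 3 and above is false.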

If we break it down further, we can understand what is currently missing and how we can reach Level 5.

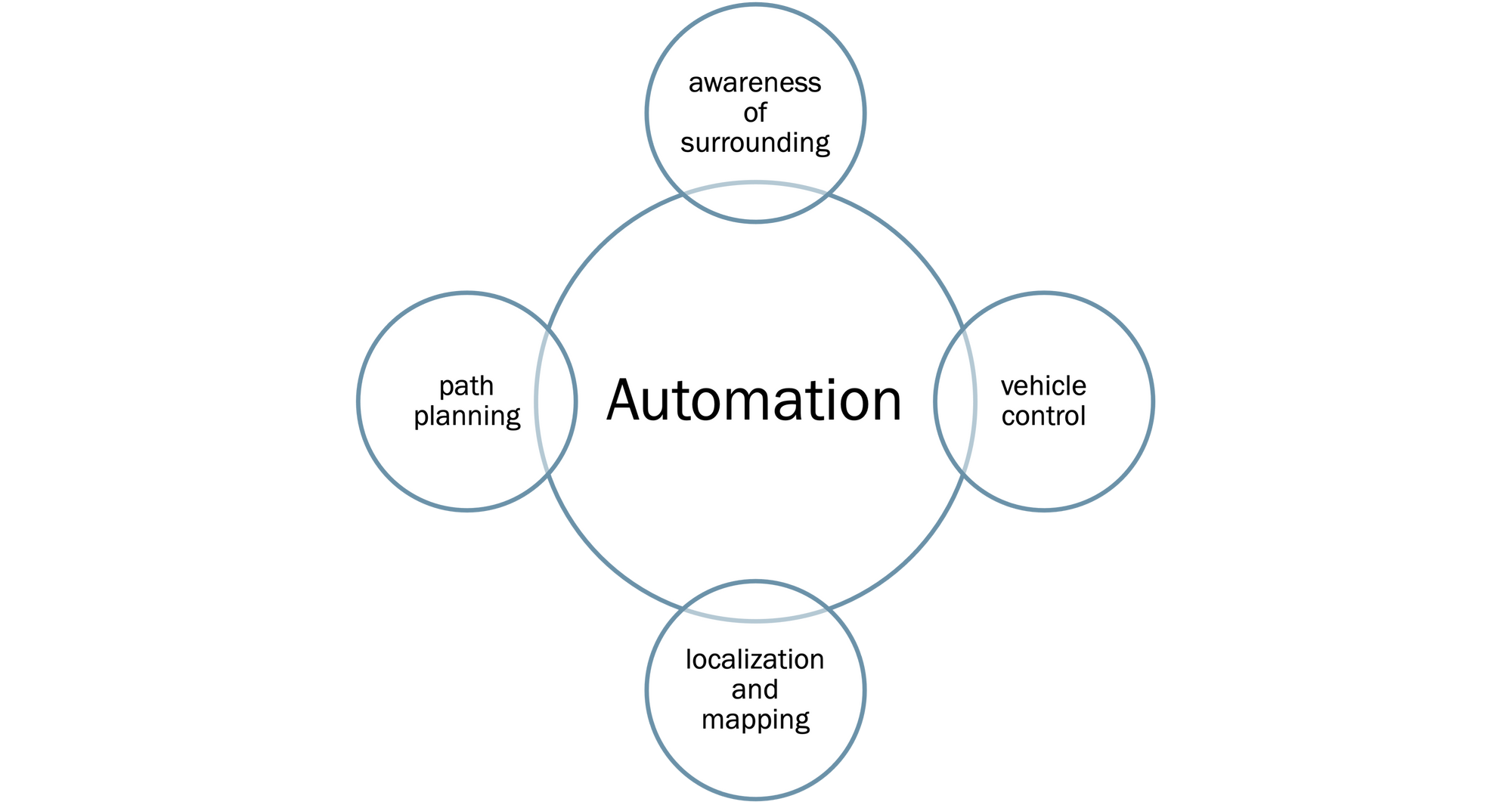

Automation in a vehicle context can be broken down into 4 components: awareness of surroundings, vehicle control, localization and mapping, and path planning.

To understand this in further detail, let's go through a thought experiment. What would you do if you were dropped in an unknown place?

1. Look at surroundings (Awareness of surroundings)

2. Try to locate where you're at, maybe look at a map (Localization and mapping)

3. From the map you will define a destination and plan your path (Path planning)

4. You will move from your current position to your destination following the path (Vehicle control)
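The four steps above can be sketched as a loop. This is a toy illustration on a 2D grid, not how a real vehicle stack is built: the `world` dictionary, the function names, and the "move along x, then along y" planner are all assumptions made up for this example.

```python
def sense(world: dict) -> dict:
    """Step 1, awareness of surroundings: read a (fake) sensor snapshot."""
    return {"position_reading": world["robot"], "goal_seen": world["goal"]}


def localize(observation: dict) -> tuple:
    """Step 2, localization: here the reading is assumed to be exact."""
    return observation["position_reading"]


def plan(start: tuple, goal: tuple) -> list:
    """Step 3, path planning: walk along x first, then along y."""
    x, y = start
    gx, gy = goal
    path = []
    while x != gx:
        x += 1 if gx > x else -1
        path.append((x, y))
    while y != gy:
        y += 1 if gy > y else -1
        path.append((x, y))
    return path


def act(world: dict, waypoint: tuple) -> None:
    """Step 4, vehicle control: move the robot to the next waypoint."""
    world["robot"] = waypoint


def drive(world: dict) -> tuple:
    """Repeat sense, localize, plan, act until the goal is reached."""
    while True:
        observation = sense(world)
        position = localize(observation)
        path = plan(position, observation["goal_seen"])
        if not path:  # empty path means we are already at the goal
            return world["robot"]
        act(world, path[0])  # take one step, then sense and re-plan
```

Calling `drive({"robot": (0, 0), "goal": (2, -1)})` ends with the robot at `(2, -1)`. Note that the loop re-plans after every single step, which is the point of the framework: the robot keeps checking its surroundings instead of blindly following an old plan.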

Robots follow this framework, where they try to understand and react according to calculations done on a computer. So, following this framework, how can we achieve awareness of surroundings when it comes to robotics?

Awareness of surroundings

Like human beings, robots need some sort of "sense" to understand their surroundings. While we use our 5 senses, robots use electronics that are called... sensors.

Sensors, as electronics, can be broken down into 2 categories: Passive and Active.

Passive sensors (like visible spectrum cameras or infrared cameras) are sensors that do not interact with the environment, i.e., they just wait to receive some input from their surroundings. In that context, our eyes can be categorized as passive sensors along with cameras.

This means that this type of sensing is really dependent on the environment. In other words, we can't see in the dark, or when it's foggy. The same holds for robots that use visual feeds, and that is one of the major drawbacks of camera-based awareness (the other is computational intensity).

Active sensors, however, produce their own signals to interact with the environment and understand their surroundings. This is mostly done by "Detection and Ranging", which is what animals that use echolocation do. Animals do it using sound that they produce; robots do it using active SoNAR (Sound Navigation and Ranging). With that concept in mind, humans developed 2 other Detection and Ranging techniques, for radio and light emitting sensors: RaDAR (Radio Detection and Ranging) and LiDAR (Light Detection and Ranging).
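All three techniques share the same basic arithmetic: emit a pulse, time the echo, and halve the round trip. Here is a minimal sketch of that calculation. The function name and constants are mine, and the speed of sound is an approximate value for dry air at around 20 °C.

```python
SPEED_OF_SOUND_AIR_M_S = 343.0      # approximate, dry air at about 20 °C
SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum value, close enough for air


def range_from_echo(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to the target, given the time between pulse and echo.

    The pulse travels to the target and back, so the one-way distance
    is half of (speed * round-trip time).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return wave_speed_m_s * round_trip_s / 2.0


# A sound echo that returns after 10 ms puts the obstacle about 1.7 m away.
sonar_range_m = range_from_echo(0.010, SPEED_OF_SOUND_AIR_M_S)

# A light echo that returns after 200 ns puts it about 30 m away.
lidar_range_m = range_from_echo(200e-9, SPEED_OF_LIGHT_M_S)
```

The difference in wave speed is why light-based sensors need much faster timing electronics than sound-based ones for the same distance.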

Currently, LiDAR is thought to be the state-of-the-art method for understanding the 3-dimensional space around a robot.

Now that we understand our environment, how do we react to it?

In part 2 of this introduction, we will go into Localization and Mapping along with Path Planning.

Real-time Control

What's real-time control? Arguably the most important thing in robotics. But since sensors take time to collect information, and the computer takes time to process that information, how do we define real-time?

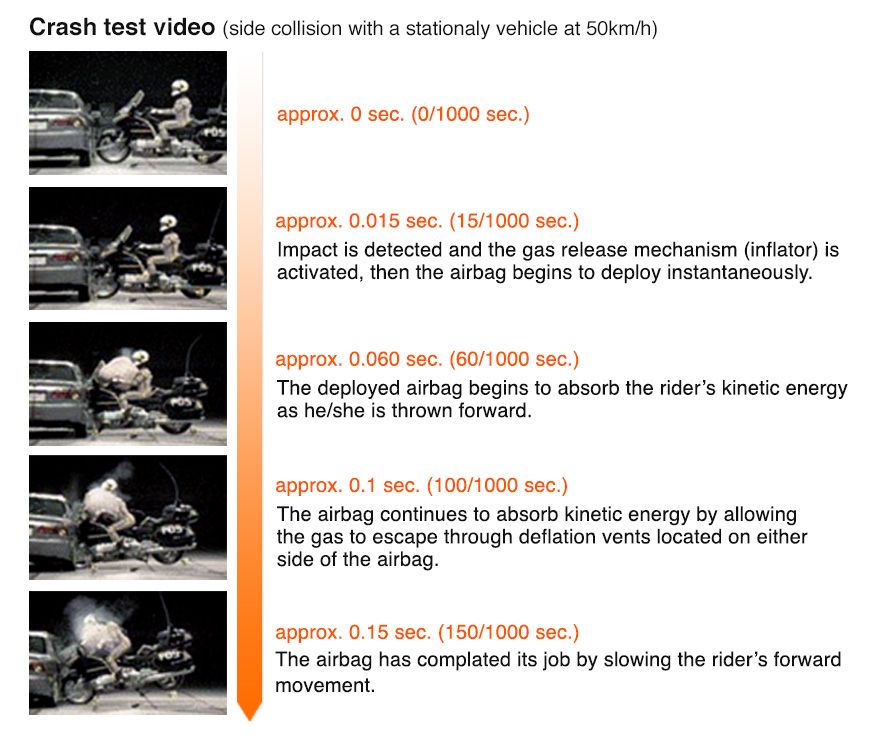

Real-time is generally defined as 'the ability to finish a task within a time boundary'. A very good example of that is the deployment of airbags. They have to be deployed within a very small time frame, and deploying any earlier or later than that could result in catastrophe and loss of life.

So how is that very precise deployment achieved? Using electronics, of course. These electronics are programmed to finish their task within a window of 0.015 to 0.060 seconds, so that the airbag starts absorbing the rider's kinetic energy in time to save their life. This time boundary is what makes the system real-time.
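The idea of a time boundary can be sketched as a simple check against that window. This only illustrates the definition: Python on a desktop operating system gives no timing guarantees, so it is not how an actual airbag controller is written, and the names below are made up for the example.

```python
import time

DEPLOY_EARLIEST_S = 0.015  # lower edge of the window quoted above
DEPLOY_LATEST_S = 0.060    # upper edge of the window quoted above


def within_window(elapsed_s: float,
                  earliest_s: float = DEPLOY_EARLIEST_S,
                  latest_s: float = DEPLOY_LATEST_S) -> bool:
    """True if the task finished inside its time boundary."""
    return earliest_s <= elapsed_s <= latest_s


def timed(task):
    """Run a task and report how long it took, in seconds."""
    start = time.perf_counter()
    result = task()
    elapsed_s = time.perf_counter() - start
    return result, elapsed_s
```

A task that takes 0.030 s passes `within_window`, while one that takes 0.010 s (too early) or 0.061 s (too late) fails. In a real-time system, finishing late is a failure even if the computed answer is correct.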

This airbag deployment system is just one form of real-time control (maybe we'll have a deep dive into real-time control systems in a future blog). Real-time control is the beating heart of robotics (interestingly enough, pacemakers are another example of it), which makes its use both abundant and important. Even the sensors whose data drives real-time reactions are usually real-time controlled themselves, like the periodic firing of Detection and Ranging sensors.

Now, if the sensors are analogous to the human senses and real-time control is analogous to the beating heart, what's the brain analogous to? And how does everything come together?

Embedded Systems

Well, the brain is analogous to a computer. A computer in this context is a chip that does mathematical operations. So what's an embedded system? It's defined as follows:

An embedded system is a computer system — a combination of a computer processor, computer memory, and input/output peripheral devices — that has a dedicated function within a larger mechanical or electronic system. It is embedded as part of a complete device often including electrical or electronic hardware and mechanical parts.

With that definition, we can say that a computer that controls a car is definitely an embedded system, as it satisfies all 4 criteria:

1. Computer processor

2. Computer memory

3. I/O peripherals

4. Larger electrical/mechanical system

Here, we can introduce an embedded system that is already in virtually all cars on the market at the moment: the Anti-lock Braking System (ABS). It controls the car's braking in real time, keeping the wheels from locking up completely under hard braking, since locked wheels make the car skid and put the driver in danger.
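To give a feel for the kind of decision such a system makes, here is a heavily simplified sketch based on the wheel slip ratio, i.e. how much slower the wheel is turning than the car is moving. A locked wheel has a slip of 1. The threshold of 0.2 and the "apply"/"release" commands are illustrative assumptions; production ABS logic is far more involved.

```python
SLIP_THRESHOLD = 0.2  # illustrative value, not taken from any real ABS


def slip_ratio(vehicle_speed_m_s: float, wheel_speed_m_s: float) -> float:
    """0.0 means the wheel rolls freely, 1.0 means it is fully locked."""
    if vehicle_speed_m_s <= 0:
        return 0.0  # a stationary car cannot skid
    return (vehicle_speed_m_s - wheel_speed_m_s) / vehicle_speed_m_s


def brake_command(vehicle_speed_m_s: float,
                  wheel_speed_m_s: float,
                  threshold: float = SLIP_THRESHOLD) -> str:
    """Release brake pressure when the wheel is about to lock."""
    if slip_ratio(vehicle_speed_m_s, wheel_speed_m_s) > threshold:
        return "release"
    return "apply"
```

With the car at 20 m/s and a wheel turning at the equivalent of 10 m/s, the slip is 0.5 and the sketch releases pressure. With the wheel at 19 m/s, the slip is 0.05 and braking continues. Running this decision many times per second, each time within a deadline, is what makes it real-time control.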

An excerpt from the book "Real-time Embedded Systems" by Jiacun Wang summarizes the ABS as a real-time embedded system:

A real-time embedded system interacts with its environment continuously and timely. To retrieve data from its environment – the target that it controls or monitors, the system must have sensors in place. For example, the ABS has several types of sensors, including wheel speed sensors, deceleration sensors, and brake pressure sensors. In the real world, on the other hand, most of the data is characterized by analog signals. In order to manipulate the data using a microprocessor, the analog data needs to be converted to digital signals, so that the microprocessor will be able to read, understand, and manipulate the data. Therefore, an analog-to-digit converter (ADC) is needed in between a sensor and a microprocessor.

The brain of an embedded system is a controller, which is an embedded computer composed of one or more microprocessors, memory, some peripherals, and a real-time software application. The software is usually composed of a set of real-time tasks that run concurrently, may or may not be with the support of a real-time operating system, depending on the complexity of the embedded system.

The controller acts upon the target system through actuators. An actuator can be hydraulic, electric, thermal, magnetic, or mechanic. In the case of ABS, the actuator is the HCU that contains valves and pumps. The output that the microprocessor delivers is a digit signal, while the actuator is a physical device and can only act on analog input. Therefore, a digit-to-analog conversion (DAC) needs to be performed in order to apply the microprocessor output to the actuator.
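The two conversions described in the excerpt can be sketched in a few lines. This assumes an idealized converter with a 5 V reference and 10-bit resolution, both picked only for illustration. Real converters add noise, nonlinearity, and sampling delays that are ignored here.

```python
def adc(voltage: float, v_ref: float = 5.0, bits: int = 10) -> int:
    """Analog to digital: map a voltage in [0, v_ref] to an integer code."""
    levels = 2 ** bits
    clamped = min(max(voltage, 0.0), v_ref)  # out-of-range inputs saturate
    code = int(clamped / v_ref * levels)
    return min(code, levels - 1)             # v_ref itself maps to the top code


def dac(code: int, v_ref: float = 5.0, bits: int = 10) -> float:
    """Digital to analog: map an integer code back to a voltage."""
    levels = 2 ** bits
    if not 0 <= code < levels:
        raise ValueError("code out of range for this resolution")
    return code / levels * v_ref
```

With these defaults, `adc(2.5)` gives code 512 and `dac(512)` gives back 2.5 V. Most voltages do not survive the round trip exactly: the result can be off by up to one step (here 5 V / 1024, about 5 mV), which is the price of representing a continuous signal with a finite number of bits.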

Note here that the statement about actuators shows that automated cars do not have to be completely electrical. However, it's just easier to have an entirely electric system whenever possible in robotics.

With that in mind, can we "hack" an off-the-shelf car and turn it autonomous? The short answer is yes. The complete answer, however, has a lot more nested questions. To understand these questions and the challenges we could face, let's walk through the design cycle of a "moving robot".

This robot is called 'Sentinel', a fire-detecting robot that I designed and developed with 4 other engineering students as part of our capstone project.

Part 2 of this introduction will start introducing the algorithms that allow for automated behavior, as well as the compute power required, by explaining the design process of project Sentinel. Stay tuned.

Update to the post, July 29

This video shows a very thorough insight into the current state of the industry, and where it might be heading.